To understand the Web in the broadest and deepest sense, to fully partake of the vision that I and my colleagues share, one must understand how the Web came to be.

—Tim Berners-Lee, Weaving the Web

Internet Vs Web

“Internet” and “Web” are not synonyms. The Internet is a networking protocol (set of rules) that allows computers of all types to connect to and communicate with other computers on the Internet. It is the world’s largest computer network, made up of hundreds of millions of computers. It’s really nothing more than the “plumbing” that allows information of various kinds to flow from computer to computer around the world.

The World Wide Web (Web), on the other hand, is a software protocol that runs on top of the Internet, allowing users to easily access files stored on Internet computers. It is one of many interfaces to the Internet, making it easy to retrieve text, pictures, and multimedia files from computers without having to know complicated commands. You just click a link and voila: You miraculously see a page displayed on your browser screen.

The Web is only about 20 years old, whereas the Internet is 50-something. Prior to the Web, computers could communicate with one another, but the interfaces weren’t as slick or easy to use as the Web. Many of these older interfaces, or “protocols,” are still around and offer many different, unique ways of communicating with other computers (and other people).

Other Internet protocols and interfaces include:

- Forums & Bulletin Boards

- Internet Mailing Lists

- Newsgroups

- Peer-to-Peer file sharing systems, such as Napster and Gnutella

- Databases accessed via Web interfaces

The Internet’s origins trace back to a project sponsored by the U.S. Defense Advanced Research Projects Agency (DARPA) in 1969 as a means for researchers and defense contractors to share information (Kahn, 2000).

The Web was created in 1990 by Tim Berners-Lee, a computer programmer working for the European Organization for Nuclear Research (CERN).

Prior to the Web, accessing files on the Internet was a challenging task, requiring specialized knowledge and skills. The Web made it easy to retrieve a wide variety of files, including text, images, audio, and video, by the simple mechanism of clicking a hypertext link.

Hypertext

A system that allows computerized objects (text, images, sounds, etc.) to be linked together. A hypertext link points to a specific object, or a specific place within a text; clicking the link opens the file associated with the object.

How the Internet Came to Be

- Up until the mid-1960s, most computers were stand-alone machines that did not connect to or communicate with other computers.

- In 1962 J.C.R. Licklider, a professor at MIT, wrote a paper envisioning a globally connected “Galactic Network” of computers (Leiner, 2000). The idea was far-out at the time, but it caught the attention of Larry Roberts, a project manager at the U.S. Defense Department’s Advanced Research Projects Agency (ARPA).

- In 1966 Roberts submitted a proposal to ARPA that would allow the agency’s numerous and disparate computers to be connected in a network similar to Licklider’s Galactic Network. Roberts’ proposal was accepted, and work began on the “ARPANET,” which would in time become what we know as today’s Internet.

- The first “node” on the ARPANET was installed at UCLA in 1969 and, gradually, throughout the 1970s, universities and defense contractors working on ARPA projects began to connect to the ARPANET.

- In 1973 the U.S. Defense Advanced Research Projects Agency (DARPA) initiated another research program to allow networked computers to communicate transparently across multiple linked networks. Whereas the ARPANET was just one network, the new project was designed to be a “network of networks.” According to Vint Cerf, widely regarded as one of the “fathers” of the Internet, “This was called the Internetting project and the system of networks which emerged from the research was known as the ‘Internet’” (Cerf, 2000).

- It wasn’t until the mid-1980s, with the simultaneous explosion in use of personal computers and the widespread adoption of a universal standard of Internet communication called Transmission Control Protocol/Internet Protocol (TCP/IP), that the Internet became widely available to anyone desiring to connect to it. Other government agencies fostered the growth of the Internet by contributing communications “backbones” that were specifically designed to carry Internet traffic.

- By the late 1980s, the Internet had grown from its initial network of a few computers to a robust communications network supported by governments and commercial enterprises around the world.

- Despite this increased accessibility, the Internet was still primarily a tool for academics and government contractors well into the early 1990s.

- As more and more computers connected to the Internet, users began to demand tools that would allow them to search for and locate text and other files on computers anywhere on the Net.

Early Net Search Tools

Although sophisticated search and information retrieval techniques date back to the late 1950s and early ’60s, these techniques were used primarily in closed or proprietary systems.

Early Internet search and retrieval tools lacked even the most basic capabilities, primarily because it was thought that traditional information retrieval techniques would not work well on an open, unstructured information universe like the Internet. Accessing a file on the Internet was a two-part process.

- First, you needed to establish a direct connection to the remote computer where the file was located, using a terminal emulation program called Telnet.

- Then you needed to use another program, called a File Transfer Protocol (FTP) client, to fetch the file itself.

To do either, you needed to know both:

- the address of the computer and

- the exact location and name of the file you were looking for

Telnet

A terminal emulation program that runs on your computer, allowing you to access a remote computer via a TCP/IP network and execute commands on that computer as if you were directly connected to it. Many libraries offered telnet access to their catalogs.

File Transfer Protocol (FTP)

A set of rules for sending and receiving files of all types between computers connected to the Internet.

Thus, “search” often meant sending a request for help to an e-mail message list or discussion forum and hoping some kind soul would respond with the details you needed to fetch the file you were looking for.

The situation improved somewhat with the introduction of “anonymous” FTP servers, which were centralized file servers specifically intended for enabling the easy sharing of files. The servers were anonymous because they were not password protected: anyone could simply log on and request any file on the system.

Files on FTP servers were organized in hierarchical directories, much like files are organized in hierarchical folders on personal computer systems today.

The hierarchical structure made it easy for the FTP server to display a directory listing of all the files stored on the server, but you still needed good knowledge of the contents of the FTP server. If the file you were looking for didn’t exist on the FTP server you were logged into, you were out of luck.

The first true search tool for files stored on FTP servers was called Archie, created in 1990 by a small team of systems administrators and graduate students at McGill University in Montreal. Archie was the prototype of today’s search engines, but it was primitive and extremely limited compared to what we have today. Archie roamed the Internet searching for files available on anonymous FTP servers, downloading directory listings of every anonymous FTP server it could find. These listings were stored in a central, searchable database called the Internet Archives Database at McGill University, and were updated monthly. Although it represented a major step forward, the Archie database was still extremely primitive, limiting searches to a specific file name or to computer programs that performed specific functions. Nonetheless, it proved extremely popular: nearly 50 percent of Internet traffic to Montreal in the early ’90s was Archie related, according to Peter Deutsch, who headed up the McGill University Archie team. The team licensed Archie to others, with the first shadow sites launched in Australia and Finland in 1992. The Archie network reached a peak of 63 installations around the world by 1995. A legacy Archie server is still kept active for historical purposes at the University of Warsaw.
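The Archie approach described above (gather directory listings from many servers, store them centrally, then search by file name) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the host names, paths, and the functions `build_index` and `find_file` are invented for the example and are not taken from Archie itself.

```python
# Hypothetical directory listings, keyed by anonymous FTP host name.
# The hosts and paths are invented for illustration only.
LISTINGS = {
    "ftp.example.edu": ["/pub/tools/gzip.tar", "/pub/docs/readme.txt"],
    "ftp.example.org": ["/mirror/gzip.tar", "/mirror/games/rogue.tar"],
}


def build_index(listings):
    """Map each bare file name to the (host, path) pairs where it appears."""
    index = {}
    for host, paths in listings.items():
        for path in paths:
            name = path.rsplit("/", 1)[-1]  # keep only the file name
            index.setdefault(name, []).append((host, path))
    return index


def find_file(index, name):
    """Return every known location of an exactly named file, sorted."""
    return sorted(index.get(name, []))


index = build_index(LISTINGS)
for host, path in find_file(index, "gzip.tar"):
    print(host, path)
```

The lookup matches only an exact file name, which mirrors the limitation noted above: you still had to know what the file was called before you could find where it lived.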

“In the brief period following the release of Archie, there was an explosion of Internet-based research projects, including WWW, Gopher, WAIS, and others” (Deutsch, 2000). “Each explored a different area of the Internet information problem space, and each offered its own insights into how to build and deploy Internet-based services,” wrote Deutsch.

Gopher, an alternative to Archie, was created by Mark McCahill and his team at the University of Minnesota in 1991 and was named for the university’s mascot, the Golden Gopher.

Gopher essentially combined the Telnet and FTP protocols, allowing users to click hyperlinked menus to access information on demand without resorting to additional commands.

Using a series of menus that allowed the user to drill down through successively more specific categories, users could ultimately access the full text of documents, graphics, and even music files, though not integrated in a single format. Gopher made it easy to browse for information on the Internet.

According to Gopher creator McCahill, “Before Gopher there wasn’t an easy way of having the sort of big distributed system where there were seamless pointers between stuff on one machine and another machine. You had to know the name of this machine and if you wanted to go over here you had to know its name.

“Gopher takes care of all that stuff for you. So navigating around Gopher is easy. It’s point and click typically. So it’s something that anybody could use to find things. It’s also very easy to put information up so a lot of people started running servers themselves and it was the first of the easy-to-use, no muss, no fuss, you can just crawl around and look for information tools. It was the one that wasn’t written for techies.”

Gopher’s “no muss, no fuss” interface was an early precursor of what later evolved into popular Web directories like Yahoo!. “Typically you set this up so that you can start out with a sort of overview or general structure of a bunch of information, choose the items that you’re interested in to move into a more specialized area and then either look at items by browsing around and finding some documents or submitting searches,” said McCahill.

A problem with Gopher was that it was designed to provide a listing of files available on computers in a specific location—the University of Minnesota, for example. While Gopher servers were searchable, there was no centralized directory for searching all other computers that were both using Gopher and connected to the Internet, or “Gopherspace” as it was called.

In November 1992, Fred Barrie and Steven Foster of the University of Nevada System Computing Services group solved this problem, creating a program called Veronica, a centralized Archie-like search tool for Gopher files.

In 1993 another program called Jughead added keyword search and Boolean operator capabilities to Gopher search.

Keyword

A word or phrase entered in a query form that a search system attempts to match in text documents in its database.

Boolean

A system of logical operators (AND, OR, NOT) that allows true-false operations to be performed on search queries, potentially narrowing or expanding results when used with keywords.
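The keyword and Boolean definitions above can be made concrete with a small sketch. It assumes a toy collection of three invented documents and hypothetical helper names (`build_inverted_index`, `lookup`); it does not reflect how Jughead or any particular search tool was actually implemented. Each keyword maps to the set of documents containing it, and the Boolean operators become set operations.

```python
# Hypothetical miniature document collection, invented for illustration.
DOCS = {
    1: "gopher menus at the university of minnesota",
    2: "archie indexes anonymous ftp servers",
    3: "veronica searches gopher menus across servers",
}


def build_inverted_index(docs):
    """Map each word to the set of document ids that contain it."""
    index = {}
    for doc_id, text in docs.items():
        for word in text.split():
            index.setdefault(word, set()).add(doc_id)
    return index


def lookup(index, keyword):
    """Return the ids of documents matching a single keyword."""
    return index.get(keyword.lower(), set())


INDEX = build_inverted_index(DOCS)

both = lookup(INDEX, "gopher") & lookup(INDEX, "servers")      # AND narrows
either = lookup(INDEX, "gopher") | lookup(INDEX, "archie")     # OR expands
excluded = lookup(INDEX, "servers") - lookup(INDEX, "gopher")  # NOT excludes
print(sorted(both), sorted(either), sorted(excluded))
```

AND returns only the document that mentions both words, OR returns every document that mentions either, and NOT removes the Gopher documents from the "servers" matches.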

Popular legend has it that Archie, Veronica and Jughead were named after cartoon characters. Archie in fact is shorthand for “Archives.” Veronica was likely named after the cartoon character (she was Archie’s girlfriend), though it’s officially an acronym for “Very Easy Rodent-Oriented Net-Wide Index to Computerized Archives.” And Jughead (Archie and Veronica’s cartoon pal) is an acronym for “Jonzy’s Universal Gopher Hierarchy Excavation and Display,” after its creator, Rhett “Jonzy” Jones, who developed the program while at the University of Utah Computer Center.

A third major search protocol developed around this time was Wide Area Information Servers (WAIS). Developed by Brewster Kahle and his colleagues at Thinking Machines, WAIS worked much like today’s metasearch engines. The WAIS client resided on your local machine, and allowed you to search for information on other Internet servers using natural language, rather than using computer commands. The servers themselves were responsible for interpreting the query and returning appropriate results, freeing the user from the necessity of learning the specific query language of each server. WAIS used an extension to a standard protocol called Z39.50 that was in wide use at the time. In essence, WAIS provided a single computer-to-computer protocol for searching for information. This information could be text, pictures, voice, or formatted documents. The quality of the search results was a direct result of how effectively each server interpreted the WAIS query.

All of the early Internet search protocols represented a giant leap over the awkward access tools provided by Telnet and FTP. Nonetheless, they still dealt with information as discrete data objects. And these protocols lacked the ability to make connections between disparate types of information (text, sounds, images, and so on) to form the conceptual links that transformed raw data into useful information.

Although search was becoming more sophisticated, information on the Internet lacked popular appeal. In the late 1980s, the Internet was still primarily a playground for scientists, academics, government agencies, and their contractors.

Fortunately, at about the same time, a software engineer in Switzerland was tinkering with a program that eventually gave rise to the World Wide Web. He called his program Enquire Within Upon Everything, borrowing the title from a book of Victorian advice that provided helpful information on everything from removing stains to investing money.

Enquire Within Upon Everything

“Suppose all the information stored on computers everywhere were linked, I thought. Suppose I could program my computer to create a space in which anything could be linked to anything. All the bits of information in every computer at CERN, and on the planet, would be available to me and to anyone else. There would be a single, global information space.

“Once a bit of information in that space was labeled with an address, I could tell my computer to get it. By being able to reference anything with equal ease, a computer could represent associations between things that might seem unrelated but somehow did, in fact, share a relationship. A Web of information would form.”

— Tim Berners-Lee, Weaving the Web

The Web was created in 1990 by Tim Berners-Lee, who at the time was a contract programmer at the European Organization for Nuclear Research (CERN) high-energy physics laboratory in Geneva, Switzerland. The Web was a side project Berners-Lee took on to help him keep track of the mind-boggling diversity of people, computers, research equipment, and other resources that are de rigueur at a massive research institution like CERN. One of the primary challenges faced by CERN scientists was the very diversity that gave the lab its strength. The lab hosted thousands of researchers every year, arriving from countries all over the world, each speaking different languages and working with unique computing systems. And since high-energy physics research projects tend to spawn huge amounts of experimental data, a program that could simplify access to information and foster collaboration was something of a Holy Grail.

Berners-Lee had been tinkering with programs that allowed relatively easy, decentralized linking capabilities for nearly a decade before he created the Web.

- He had been influenced by the work of Vannevar Bush, who served as Director of the Office of Scientific Research and Development during World War II. In a landmark paper called “As We May Think,” Bush proposed a system he called MEMEX, “a device in which an individual stores all his books, records, and communications, and which is mechanized so that it may be consulted with exceeding speed and flexibility” (Bush, 1945). The materials stored in the MEMEX would be indexed, of course, but Bush aspired to go beyond simple search and retrieval. The MEMEX would allow the user to build conceptual “trails” as he moved from document to document, creating lasting associations between different components of the MEMEX that could be recalled at a later time. Bush called this “associative indexing ... the basic idea of which is a provision whereby any item may be caused at will to select immediately and automatically another. This is the essential feature of the MEMEX. The process of tying two items together is the important thing.”

- In Bush’s visionary writings, it’s easy for us to see the seeds of what we now call hypertext. But it wasn’t until 1965 that Ted Nelson actually described a computerized system that would operate in a manner similar to what Bush envisioned. Nelson called his system “hypertext” and described the next-generation MEMEX in a system he called Xanadu. Nelson’s project never achieved enough momentum to have a significant impact on the world.

- Another twenty years would pass before Xerox implemented the first mainstream hypertext program, called NoteCards, in 1985.

- A year later, Owl Ltd. created a program called Guide, which functioned in many respects like a contemporary Web browser, but lacked Internet connectivity. Bill Atkinson, an Apple Computer programmer best known for creating MacPaint, the first bitmap painting program, created the first truly popular hypertext program in 1987. His HyperCard program was specifically for the Macintosh, and it also lacked Net connectivity. Nonetheless, the program proved popular, and the basic functionality and concepts of hypertext were assimilated by Microsoft, appearing first in standard help systems for Windows software.

Weaving the Web

The foundations and pieces necessary to build a system like the World Wide Web were in place well before Tim Berners-Lee began his tinkering.

But unlike others before him, Berners-Lee had the brilliant insight that a simple form of hypertext, integrated with the universal communication protocols offered by the Internet, would create a platform-independent system with a uniform interface for any computer connected to the Internet.

He tried to persuade many of the key players in the hypertext industry to adopt his ideas for connecting to the Net, but none were able to grasp his vision of simple, universal connectivity.

So Berners-Lee set out to do the job himself, creating a set of tools that collectively became the prototype for the World Wide Web (Connolly, 2000). In a remarkable burst of energy, Berners-Lee began work in October 1990 on the first Web client—the program that allowed the creation, editing, and browsing of hypertext pages. He called the client WorldWideWeb, after the mathematical term used to describe a collection of nodes and links in which any node can be linked to any other. “Friends at CERN gave me a hard time, saying it would never take off—especially since it yielded an acronym that was nine syllables long when spoken,” he wrote in Weaving the Web.

- To make the client simple and platform independent, Berners-Lee created HTML, or HyperText Markup Language, which was a dramatically simplified version of a text formatting language called SGML (Standard Generalized Markup Language). All Web documents formatted with HTML tags would display identically on any computer in the world.

- Next, he created the HyperText Transfer Protocol (HTTP), the set of rules that computers would use to communicate over the Internet and allow hypertext links to automatically retrieve documents regardless of their location. He also devised the Universal Resource Identifier, a standard way of giving documents on the Internet a unique address (what we call URLs today).

- Finally, he brought all of the pieces together in the form of a Web server, which stored HTML documents and served them to other computers making HTTP requests for documents with URLs.

- Berners-Lee completed his work on the initial Web tools by Christmas 1990.
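The three pieces listed above (a URL that addresses a document, an HTTP request that asks a server for it, and HTML links that point onward to other documents) can be illustrated with a short Python sketch using only the standard library. The address, the sample page, and the names `build_get_request` and `LinkCollector` are assumptions made for this example; the request uses the later HTTP/1.0 text form rather than whatever the 1990 prototype exchanged, and nothing is actually sent over a network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


def build_get_request(url):
    """Format the text of a minimal HTTP/1.0 GET request for a URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return f"GET {path} HTTP/1.0\r\nHost: {parts.netloc}\r\n\r\n"


class LinkCollector(HTMLParser):
    """Collect the absolute target of every <a href="..."> in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


# Hypothetical address and page content, invented for illustration.
PAGE_URL = "http://www.example.org/docs/index.html"
PAGE_HTML = (
    '<h1>Notes</h1><p>See <a href="about.html">about</a> and '
    '<a href="http://www.example.com/">elsewhere</a>.</p>'
)

collector = LinkCollector(PAGE_URL)
collector.feed(PAGE_HTML)
print(build_get_request(PAGE_URL))
print(collector.links)
```

The relative link `about.html` resolves against the page's own address, while the absolute link is kept as written, which is what lets a hypertext link retrieve a document regardless of its location.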

Berners-Lee began actively to promote the Web outside of the lab, attending conferences and participating in Internet mailing and discussion lists. Slowly, the Web began to grow as more and more people implemented clients and servers around the world. There were really two seminal events that sparked the explosion in popular use of the Web.

- The first was the development of graphical Web browsers, including ViolaWWW, Mosaic, and others that integrated text and images into a single browser window. For the first time, Internet information could be displayed in a visually appealing format previously limited to CD-ROM-based multimedia systems. This set off a wave of creativity among Web users, establishing a new publishing medium that was freely available to anyone with Internet access and the basic skills required to design a Web page.

- Then in 1995, the U.S. National Science Foundation ceased being the central manager of the core Internet communications backbone, and transferred both funds and control to the private sector. Companies were free to register “dot-com” domain names and establish an online presence. It didn’t take long for business and industry to realize that the Web was a powerful new avenue for online commerce, triggering the dot-com gold rush of the late 1990s.

Early Web Navigation

The Web succeeded where other early systems failed to catch on largely because of its decentralized nature.

Despite the fact that the first servers were at CERN, neither Berners-Lee nor the lab exercised control over who put up a new server anywhere on the Internet.

Anyone could establish his or her own Web server. The only requirement was to link to other servers, and inform other Web users about the new server so they could in turn create links back to it.

But this decentralized nature also created a problem. Despite the ease with which users could navigate from server to server on the Web simply by clicking links, navigation was ironically becoming more difficult as the Web grew. No one was “in charge” of the Web; there was no central authority to create and maintain an index of the growing number of available documents.

To facilitate communication and cross-linkage between early adopters of the Web, Berners-Lee established a list of Web servers that could be accessed via hyperlinks. This was the first Web directory. This early Web guide is still online.

Beyond the list of servers at CERN, there were few centralized directories, and no global Web search services. People notified the world about new Web pages in much the same way they had previously announced new Net resources, via e-mail lists or online discussions.

Eventually, some enterprising observers of the Web began creating lists of links to their favorite sites. John Makulowich, Joel Jones, Justin Hall, and the people at O’Reilly & Associates publishing company were among the most noted authors maintaining popular link lists.

Eventually, many of these link lists started “What’s New” or “What’s Cool” pages, serving as de facto announcement services for new Web pages. But they relied on Web page authors to submit information, and the Web’s relentless growth rate ultimately made it impossible to keep the lists either current or comprehensive.

What was needed was an automated approach to Web page discovery and indexing. The Web had now grown large enough that information scientists became interested in creating search services specifically for the Web. Sophisticated information retrieval techniques had been available since the early 1960s, but they were only effective when searching closed, relatively structured databases. The open, laissez-faire nature of the Web made it too messy to easily adapt traditional information retrieval techniques. New, Web-centric approaches were needed. But how best to approach the problem? Web search would clearly have to be more sophisticated than a simple Archie-type service. But should these new “search engines” attempt to index the full text of Web documents, much as earlier Gopher tools had done, or simply broker requests to local Web search services on individual computers, following the WAIS model?

The First Search Engines

Tim Berners-Lee’s vision of the Web was of an information space where data of all types could be freely accessed. But in the early days of the Web, the reality was that most of the Web consisted of simple HTML text documents. Since few servers offered local site search services, developers of the first Web search engines opted for the model of indexing the full text of pages stored on Web servers. To adapt traditional information retrieval techniques to Web search, they built huge databases that attempted to replicate the Web, searching over these relatively controlled, closed archives of pages rather than trying to search the Web itself in real time.

With this fateful architectural decision, limiting search engines to HTML text documents and essentially ignoring all other types of data available via the Web, the Invisible Web was born.

The biggest challenge search engines faced was simply locating all of the pages on the Web. Since the Web lacked a centralized structure, the only way for a search engine to find Web pages to index was by following links to pages and gathering new links from those pages to add to the queue to visit for indexing. This was a task that required computer assistance, simply to keep up with all of the new pages being added to the Web each day.

But there was a subtler problem that needed solving. Search engines wanted to fetch and index all pages on the Web, but the search engines frequently revisited popular pages at the expense of new or obscure pages, because popular pages had the most links pointing to them, which the crawlers naturally followed. What was needed was an automated program that had a certain amount of intelligence, able to recognize when a link pointed to a previously indexed page and ignore it in favor of finding new pages.

These programs became known as Web robots, “autonomous agents” that could find their way around the Web discovering new Web pages. Autonomous is simply a fancy way of saying that the agent programs can do things on their own without a person directly controlling them, and that they have some degree of intelligence, meaning they can make decisions and take action based on these decisions.
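The link-following process described above, including the need to skip pages that have already been visited, can be sketched as a breadth-first traversal with a "seen" set. The miniature Web below is invented for illustration, and `crawl` is a hypothetical name; a real robot would fetch pages over the network and extract their links rather than read them from a dictionary.

```python
from collections import deque

# Hypothetical miniature Web: each page maps to the pages it links to.
# Nearly every page links to "popular", as in the problem described above.
WEB = {
    "home": ["popular", "news"],
    "popular": ["home", "news"],
    "news": ["popular", "obscure"],
    "obscure": ["popular"],
}


def crawl(web, start):
    """Visit every page reachable from start exactly once, breadth-first."""
    seen = {start}          # pages already queued or visited
    queue = deque([start])  # pages waiting to be visited
    order = []
    while queue:
        page = queue.popleft()
        order.append(page)
        for link in web.get(page, []):
            if link not in seen:  # ignore links to known pages
                seen.add(link)
                queue.append(link)
    return order


print(crawl(WEB, "home"))
```

Because each page is added to `seen` the first time a link to it is found, the heavily linked "popular" page is visited once, and the crawler still reaches "obscure", which only one page links to.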

In June 1993 Matthew Gray, a physics student at MIT, created the first widely recognized Web robot, dubbed the “World Wide Web Wanderer.” Gray’s interest was limited to determining the size of the Web and tracking its continuing growth. The Wanderer simply visited Web pages and reported on their existence, but didn’t actually fetch or store pages in a database. Nonetheless, Gray’s robot led the way for more sophisticated programs that would both visit and fetch Web pages for storage and indexing in search engine databases.

The year 1994 was a watershed one for Web search engines. Brian Pinkerton, a graduate student in Computer Sciences at the University of Washington, created a robot called WebCrawler in January 1994. Pinkerton created his robot because his school friends were always sending him e-mails about the cool sites they had found on the Web, and Pinkerton didn’t have time to surf to find sites on his own; he wanted to “cut to the chase” by searching for them directly. WebCrawler went beyond Gray’s Wanderer by actually retrieving the full text of Web documents and storing them in a keyword-searchable database. Pinkerton made WebCrawler public in April 1994 via a Web interface. The database contained entries from about 6,000 different servers, and after a week was handling 100+ queries per day. The first Web search engine was born. The image evoked by Pinkerton’s robot “crawling” the Web caught the imagination of programmers working on automatic indexing of the Web. Specialized search engine robots soon became known generically as “crawlers” or “spiders,” and their page-gathering activity was called “crawling” or “spidering” the Web.

Crawler-based search engines proliferated in 1994. Many of the early search engines were the result of academic or corporate research projects. Two popular engines were the World Wide Web Worm, created by Oliver McBryan at the University of Colorado, and WWW JumpStation, by Jonathon Fletcher at the University of Stirling in the U.K.

Neither lasted long: Idealab purchased WWWWorm and transformed it into the first version of the GoTo search engine. JumpStation simply faded out of favor as two other search services launched in 1994 gained popularity: Lycos and Yahoo!.

Michael Mauldin and his team at the Center for Machine Translation at Carnegie Mellon University created Lycos (named for the wolf spider, Lycosidae lycosa, which catches its prey by pursuit, rather than in a web). Lycos quickly gained acclaim and prominence in the Web community, for the sheer number of pages it included in its index (1.5 million documents by January 1995) and the quality of its search results. Lycos also pioneered the use of automated abstracts of documents in search results, something not offered by WWW Worm or JumpStation.

Also in 1994, two graduate students at Stanford University created “Jerry’s Guide to the Internet,” built with the help of search spiders, but consisting of editorially selected links compiled by hand into a hierarchically organized directory. In a whimsical acknowledgment of this structure, Jerry Yang and David Filo renamed their service “Yet Another Hierarchical Officious Oracle,” commonly known today as Yahoo!.

In 1995 Infoseek, AltaVista, and Excite made their debuts, each offering different capabilities for the searcher.

Metasearch engines, programs that searched several search engines simultaneously, also made an appearance this year. SavvySearch, created by Daniel Dreilinger at Colorado State University, was the first metasearch engine, and MetaCrawler, from the University of Washington, soon followed. From this point on, search engines began appearing almost every day.

As useful and necessary as they were for finding documents, Web search engines all shared a common weakness: They were designed for one specific task, namely to find and index Web documents and to point users to the most relevant documents in response to keyword queries. During the Web’s early years, when most of its content consisted of simple HTML pages, search engines performed their tasks admirably. But the Internet continued to evolve, with information being made available in many formats other than simple text documents. For a wide variety of reasons, Web search services began to fall behind, both in keeping up with the growth of the Web and in their ability to recognize and index non-text information, which we refer to as the Invisible Web.

To become an expert searcher, you need to have a thorough understanding of the tools at your disposal and, even more importantly, when to use them. Now that you have a sense of the history of the Web and the design philosophy that led to its universal adoption, let’s take a closer look at contemporary search services, focusing on their strengths but also illuminating their weaknesses.

Information Seeking on the Visible Web

The creators of the Internet were fundamentally interested in solving a single problem: how to connect isolated computers in a universal network, allowing any machine to communicate with any other regardless of type or location.

The network protocol they developed proved to be an elegant and robust solution to this problem, so much so that a myth emerged that the Internet was designed to survive a nuclear attack (Hafner and Lyon, 1998). In solving the connectivity problem, however, the Net’s pioneers largely ignored three other major problems, problems that made using the Internet significantly challenging to all but the most skilled computer users.

- The first problem was one of incompatible hardware. Although the TCP/IP network protocol allows hardware of virtually any type to establish basic communications, once a system is connected to the network it may not be able to meaningfully interact with other systems. Programs called “emulators” that mimic other types of hardware are often required for successful communication.

- The second problem was one of incompatible software. A computer running the UNIX operating system is completely different from one running a Windows or Macintosh operating system. Again, translation programs were often necessary to establish communication.

- Finally, even if computers with compatible hardware and software connected over the Internet, they still often encountered the third major problem: incompatible data structures. Information can be stored in a wide variety of data structures on a computer, ranging from a simple text file to a complex “relational” database consisting of a wide range of data types.

HTML would also display documents in virtually identical format regardless of the type of hardware or software running on either the computer serving the page or the client computer viewing the document. To meet this goal, HTML was engineered as a very simple, barebones language. Although Berners-Lee foresaw Web documents linking to a wide range of disparate data types, the primary focus was on text documents. Thus the third problem—the ability to access a wide range of data types—was only partially solved.

The simplicity of the point and click interface also has an Achilles’ heel. It’s an excellent method for browsing through a collection of related documents—simply click, click, click, and documents are displayed with little other effort. Browsing is completely unsuitable and inefficient, however, for searching through a large information space.

To understand the essential differences between browsing and searching, think of how you use a library.

If you’re familiar with a subject, it’s often most useful to browse in the section where books about the subject are shelved. Because of the way the library is organized, often using either the Dewey Decimal or Library of Congress Classification system, you know that all of the titles in the section are related, and browsing them often leads to unexpected discoveries that prove quite valuable.

If you’re unfamiliar with a subject, however, browsing is both inefficient and potentially futile if you fail to locate the section of the library where the material you’re interested in is shelved. Searching, using the specialized tools offered by a library’s catalog, is far more likely to provide satisfactory results.

Using the Web to find information has much in common with using the library. Sometimes browsing provides the best results, while other information needs require nothing less than sophisticated, powerful searching to achieve the best results. We look at browsing and searching, the two predominant methods for finding information on the Web. In examining the strengths and weaknesses of each approach, you’ll understand how general-purpose information-seeking tools work, an essential foundation for later understanding why they cannot fully access the riches of the Invisible Web.

Browsing vs. Searching

There are two fundamental methods for finding information on the Web: browsing and searching.

- Browsing is the process of following a hypertext trail of links created by other Web users. A hypertext link is a pointer to another document, image, or other object on the Web. The words making up the link are the title or description of the document that you will retrieve by clicking the link. By its very nature, browsing the Web is both easy and intuitive.

- Searching, on the other hand, relies on powerful software that seeks to match the keywords you specify with the most relevant documents on the Web. Effective searching, unlike browsing, requires learning how to use the search software as well as lots of practice to develop skills to achieve satisfactory results.

When the Web was still relatively new and small, browsing was an adequate method for locating relevant information. Just as books in a particular section of a library are related, links from one document tend to point to other documents that are related in some way. However, as the Web grew in size and diversity, the manual, time-intensive nature of browsing from page to page made locating relevant information quickly and efficiently all but impossible. Web users were crying out for tools that could help satisfy their information needs.

Search tools using two very different methods emerged to help users locate information on the Web.

- One method, called a Web directory, was modeled on early Internet search tools like Archie and Gopher.

- The other method, called a search engine, drew on classic information retrieval techniques that had been widely used in closed, proprietary databases but hardly at all in the open universe of the Internet.

Web directories are similar to a table of contents in a book; search engines are more akin to an index.

Like a table of contents, a Web directory uses a hierarchical structure to provide a high level overview of major topics. Browsing the table of contents allows the reader to quickly turn to interesting sections of a book by examining the titles of chapters and subchapters. Browsing the subject-oriented categories and subcategories in a Web directory likewise leads to categories pointing to relevant Web documents.

In both cases, however, since the information is limited to brief descriptions of what you’ll find, you have no assurance that what you’re looking for is contained in either the section of the book or on a specific Web page. You must ultimately go directly to the source and read the information yourself to decide.

A book’s index offers a much finer level of granularity, providing explicit pointers to specific keywords or phrases regardless of where they appear in the book. Search engines are essentially full-text indexes of Web pages, and will locate keywords or phrases in any matching documents regardless of where they are physically located on the Web.

From a structural standpoint, a directory has the form of a hierarchical graph, with generic top-level categories leading to increasingly more specific subcategories as the user drills down the hierarchy by clicking hypertext links. Ultimately, at the bottom-most node for a branch of the hierarchical graph, the user is presented with a list of document titles hand-selected by humans as the most appropriate for the subcategory.
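The drill-down structure described above can be sketched in a few lines of Python. This is only an illustration: the category names, the titles, and the helper functions `drill_down` and `subcategories` are all invented here, and a real directory stores far more about each entry.

```python
# Toy directory: nested dicts are categories, lists are hand-picked titles.
# All category names and titles below are made up for illustration.
directory = {
    "Science": {
        "Astronomy": {
            "Planets": ["Nine Planets Tour", "Mars Exploration Notes"],
        },
        "Biology": ["Cell Biology Primer"],
    },
    "Recreation": {
        "Travel": ["Budget Travel Tips"],
    },
}


def drill_down(tree, path):
    """Follow one category per click and return whatever sits at the end."""
    node = tree
    for category in path:
        node = node[category]  # one "click" on a category link
    return node


def subcategories(tree, path):
    """List the choices a user sees at a given level of the hierarchy."""
    node = drill_down(tree, path)
    return sorted(node) if isinstance(node, dict) else []


print(subcategories(directory, ["Science"]))
print(drill_down(directory, ["Science", "Astronomy", "Planets"]))
```

Each step narrows the choices, and only the bottom-most node holds document titles, which is why browsing a directory works best when you already know roughly where a topic belongs.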

Search engines have no such hierarchical structure. Rather, they are full-text indexes of millions of Web pages, organized in an inverted index structure. Whenever a searcher enters a query, the entire index is searched, and a variety of algorithms are used to find relationships and compute statistical correlations between keywords and documents. Documents judged to have the most “relevance” are presented first in a result list.

Relevance

The degree to which a retrieved Web document matches a user’s query or information need. Relevance is often a complex calculation that weighs many factors, ultimately resulting in a score that’s expressed as a percentage value.
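To make the idea of a percentage score concrete, here is a deliberately naive sketch. It assumes a single factor, the share of distinct query words that appear in a document, whereas real engines weigh many factors and keep their formulas private. The function names and sample documents are hypothetical.

```python
def relevance_percent(query, document):
    """Toy score: share of distinct query words found in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    if not query_words:
        return 0.0
    return 100.0 * len(query_words & doc_words) / len(query_words)


def rank(query, docs):
    """Return (score, name) pairs, most relevant first."""
    scored = [(relevance_percent(query, text), name) for name, text in docs.items()]
    # Highest score first; ties are broken by name so the order is stable.
    return sorted(scored, key=lambda pair: (-pair[0], pair[1]))


docs = {
    "doc1": "life is good",
    "doc2": "bad or good",
    "doc3": "love of life",
}

for score, name in rank("good life", docs):
    print(f"{name}: {score:.0f}%")
```

Only the first document contains both query words, so it is listed first; the other two each match one word and tie.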

Browsing and searching both play important roles for the searcher. By design, browsing is the most efficient way to use a directory, and searching with keywords or phrases is the best way to use a search engine. Unfortunately, the boundaries between what might be considered a “pure” directory or search engine are often blurred. For example,

- Most directories offer a search form that allows you to quickly locate relevant categories or links without browsing.

- Similarly, most search engines offer access to directory data provided by a business partner that’s independent of the actual search engine database.

- Muddying the waters further, when you enter keywords into a search engine, results are presented in a browsable list.

These ambiguities notwithstanding, it’s reasonably easy to tell the difference between a Web directory and a search engine—and it’s an important distinction to make when deciding what kind of search tool to use for a particular information need. Let’s take a closer look at Web directories and search engines, examining how they’re compiled and how they work to provide results to a searcher.

Web Directories

Web directories, such as Yahoo!, LookSmart, and the Open Directory Project (ODP) are collections of links to Web pages and sites that are arranged by subject. They are typically hierarchical in nature, organized into a structure that classifies human knowledge by topic, technically known as an ontology. These ontologies often resemble the structure used by traditional library catalog systems, with major subject areas divided into smaller, more specific subcategories.

Directories take advantage of the power of hypertext, creating a clickable link for each topic, subtopic, and ultimate end document, allowing the user to successively drill down from a broad subject category to a narrow subcategory or specific document. This extensively linked structure makes Web directories ideal tools for browsing for information. To use another real-world metaphor, directories are similar to telephone yellow pages, because they are organized by category or topic, and often contain more information than bare-bones white pages listings.

How Web Directories Work

The context and structure provided by a directory’s ontology allows its builders to be very precise in how they categorize pages. Many directories annotate their links with descriptions or comments, so you can get an idea of what a Web site or page is about before clicking through and examining the actual page a hyperlink points to.

There are two general approaches to building directories.

- The closed model used by Yahoo!, LookSmart, and NBCi relies on a small group of employees, usually called editors, to select and annotate links for each category in the directory’s ontology. The expertise of directory editors varies, but they are usually subject to some sort of quality-control mechanism that assures consistency throughout the directory.

- The open model, as epitomized by the Open Directory Project, Wherewithal, and other less well known services, relies on a cadre of volunteer editors to compile the directory. Open projects tend to have more problems with quality control over time, but since they cost so little to compile and are often made freely available to anyone wishing to use their data, they have proven popular with the Web community at large.

Most Web directories offer some type of internal search that allows the user to bypass browsing and get results from deep within the directory by using a keyword query. Remember, however, that directories consist only of links and annotations. Using a directory’s search function searches the words making up these links and annotations, not the full-text of Web documents they point to, so it’s possible that your results will be incomplete or omit potentially good matches.
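The difference between searching annotations and searching full text is easy to see in a small sketch. The entries, field names, and both search functions below are invented for illustration, and the matching is a plain substring test rather than anything a real directory uses.

```python
# Hypothetical directory entries: title, annotation, and the full text of the
# page each link points to. All of the content is invented for illustration.
entries = [
    {
        "title": "Garden Basics",
        "annotation": "Beginner advice on soil and watering.",
        "page_text": "Soil, watering, and a long section on growing tomatoes.",
    },
    {
        "title": "Tomato Growers Club",
        "annotation": "Forum for people who grow tomatoes.",
        "page_text": "Welcome to the club. Post your questions here.",
    },
]


def directory_search(keyword, entries):
    """Match only against the words in each link's title and annotation."""
    keyword = keyword.lower()
    return [
        e["title"]
        for e in entries
        if keyword in (e["title"] + " " + e["annotation"]).lower()
    ]


def full_text_search(keyword, entries):
    """Match against the text of the page itself, as a search engine would."""
    keyword = keyword.lower()
    return [e["title"] for e in entries if keyword in e["page_text"].lower()]


print(directory_search("tomatoes", entries))
print(full_text_search("tomatoes", entries))
```

The directory search misses “Garden Basics” because the word appears on the page but not in its annotation, which is exactly the kind of omission to keep in mind when a directory search comes up short.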

Since most directories tend to be small, rarely larger than one to two million links, internal results are often supplemented with additional results from a general-purpose search engine. These supplemental results are called “fall-through” or “fall-over” results. Usually, supplemental results are differentiated from directory results in some way. For example, a search on Yahoo! typically returns results labeled “Web Sites” since Yahoo! entries almost always point to the main page of a Web site. Fall-through results are labeled “Web Pages” and are provided by Yahoo!’s search partner.

Different search engines power fall-through results for other popular Web directories. For example, MSN and About.com use fall-through results provided by Inktomi, while LookSmart uses AltaVista results.

When a directory search fails to return any results, fall-through results from a search engine partner are often presented as primary results.

In general, Web directories are excellent resources for getting a high-level overview of a subject, since all entries in a particular category are “exposed” on the page (rather than limited to an arbitrary number, such as 10 to 20, per page).

Issues with Web Directories

Because their scope is limited and links have been hand-selected, directories are very powerful tools for certain types of searches.

However, no directory can provide the comprehensive coverage of the Web that a search engine can. Here are some of the important issues to consider when deciding whether to use a Web directory for a particular type of search.

Directories are inherently small. It takes time for an editor to find appropriate resources to include in a directory, and to create meaningful annotations. Because they are hand compiled, directories are by nature much smaller than most search engines. This size limitation has both positives and negatives for the searcher.

- On the positive side, directories typically have a narrow or selective focus. A narrow or selective focus makes the searcher’s job easier by limiting the number of possible options. Most directories have a policy that requires editors to perform at least a cursory evaluation of a Web page or site before including a link to it in the directory, in theory assuring that only hyperlinks to the highest quality content are included. Directories also generally feature detailed, objective, succinct annotations, written by a person who has taken the time to evaluate and understand a resource, so they often convey a lot of detail. Search engine annotations, on the other hand, are often arbitrarily assembled by software from parts of the page that may convey little information about the page.

- On the negative side, Web directories are often arbitrarily limited, either by design or by extraneous factors such as a lack of time, knowledge, or skill on the part of the editorial staff.

Though most directories strive to provide objective coverage of the Web, it’s important to be vigilant for any signs of bias and the quality of resources covered.

Timeliness. Directory links are typically maintained by hand, so upkeep and maintenance is a very large issue. The constantly changing, dynamic nature of the Web means that sites are removed, URLs are changed, companies merge; all of these events effectively “break” links in a directory. Link checking is an important part of keeping a directory up to date, but not all directories do a good job of frequently verifying the links in the collection.

Directories are also vulnerable to “bait and switch” tactics by Webmasters. Such tactics are generally used only when a site stands no chance of being included in a directory because its content violates the directory’s editorial policy. Adult sites often use this tactic, for example, by submitting a bogus “family friendly” site, which is evaluated by editors. Once the URL for the site is included in the directory, the “bait” site is taken down and replaced with risqué content that wouldn’t have passed muster in the first place. The link to the site (and its underlying URL) remains the same, but the site’s content is entirely different from what was originally submitted by the Webmaster and approved by the directory editor. It’s a risky tactic, since many directories permanently ban sites that they catch trying to fool them in this manner. Unfortunately, it can be effective, as the chicanery is unlikely to be discovered unless users complain.

Lopsided coverage. Directory ontologies may not accurately reflect a balanced view of what’s available on the Web. For the specialized directories, this isn’t necessarily a bad thing. However, lopsided coverage in a general-purpose directory is a serious disservice to the searcher. Some directories have editorial policies that mandate a site being listed in a particular category even if hundreds or thousands of other sites are included in the category. This can make it difficult to find resources in these “overloaded” categories.

Examples of huge, lopsided categories include Yahoo!’s “Business” category and the Open Directory Project’s “Society” category. The opposite side of this problem occurs when some categories receive little or no coverage, whether due to editorial neglect or simply a lack of resources to assure comprehensive coverage of the Web.

Charging for listings. There has been a notable trend toward charging Webmasters a listing fee to be included in many directories. While most businesses consider this a reasonable expense, other organizations or individuals may find the cost to be too great. This “pay to play” trend almost certainly excludes countless valuable sites from the directories that abide by this policy.

Search Engines

Search engines are databases containing full-text indexes of Web pages. When you use a search engine, you are actually searching this database of retrieved Web pages, not the Web itself. Search engine databases are finely tuned to provide rapid results, which would be impossible if the engines were to attempt to search the billions of pages on the Web in real time.

Search engines are similar to telephone white pages, which contain simple listings of names and addresses. Unlike yellow pages, which are organized by category and often include a lot of descriptive information about businesses, white pages provide minimal, bare bones information. However, they’re organized in a way that makes it very easy to look up an address simply by using a name like “Smith” or “Veerhoven.”

Search engines are compiled by software “robots” that voraciously suck millions of pages into their indices every day. When you search an index, you’re trying to coax it to find a good match between the keywords you type in and all of the words contained in the search engine’s database. In essence, you’re relying on a computer to do simple pattern-matching between your search terms and the words in the index. AltaVista, HotBot, and Google are examples of search engines.

How Search Engines Work

Search engines are complex programs. In a nutshell, they consist of several distinct parts:

- The Web crawler (or spider), which finds and fetches Web pages

- The indexer, which indexes every word on every page and stores the resulting index of words in a huge database

- The query processor, which compares your search query to the index and recommends the best possible matching documents

Myth: All Search Engines Are Alike

Search engines vary widely in their comprehensiveness, currency, and coverage of the Web. Even beyond those factors, search engines, like people, have “personalities,” with strengths and weaknesses, admirable traits, and irritating flaws. By turns search engines can be stolidly reliable and exasperatingly flaky. And just like people, if you ask for something outside their area of expertise, you’ll get the electronic equivalent of a blank stare and a resounding “huh?”

A huge issue is the lack of commonality between interfaces, syntax, and capabilities. Although they share superficial similarities, all search engines are unique both in terms of the part of the Web they have indexed, and how they process search queries and rank results. Many searchers make the mistake of continually using their “favorite” engine for all searches. Even if they fail to get useful results, they’ll keep banging away, trying different keywords or otherwise trying to coax an answer from a system that simply may not be able to provide it. A far better approach is to spend time with all of the major search engines and get to know how they work, what types of queries they handle better than others, and generally what kind of “personality” they have. If one search engine doesn’t provide the results you’re looking for, switch to another. And most important of all, if none of the engines seem to provide reasonable results, you’ve just got a good clue that what you’re seeking is likely to be located on the Invisible Web—if, in fact, it’s available online at all.

WEB CRAWLERS

Web crawlers have the sole mission of finding and retrieving pages on the Web and handing them off to the search engine’s indexers. It’s easy to imagine a Web crawler as a little sprite scuttling across the luminous strands of cyberspace, but in reality Web crawlers do not traverse the Web at all. In fact, crawlers function much like your Web browser, by sending a request to a Web server for a Web page, downloading the entire page, then handing it off to the search engine’s indexer.

Crawlers, of course, request and fetch pages much more quickly than you can with a Web browser. In fact most Web crawlers can request hundreds or even thousands of unique pages simultaneously. Given this power, most crawlers are programmed to spread out their requests for pages from individual servers over a period of time to avoid overwhelming the server or consuming so much bandwidth that human users are crowded out. Crawlers find pages in two ways.

- Most search engines have an “add URL” form, which allows Web authors to notify the search engine of a Web page’s address. In the early days of the Web, this method for alerting a search engine to the existence of a new Web page worked well: the crawler simply took the list of all URLs submitted and retrieved the underlying pages. Unfortunately, spammers figured out how to create automated bots that bombarded the add URL form with millions of URLs pointing to spam pages. Most search engines now reject almost 95 percent of all URLs submitted through their add URL forms. It’s likely that, over time, most search engines will phase out their add URL forms in favor of the second method that crawlers can use to discover pages, one that’s easier to control.

- This second method of Web page discovery takes advantage of the hypertext links embedded in most Web pages. When a crawler fetches a page, it culls all of the links appearing on the page and adds them to a queue for subsequent crawling. As the crawler works its way through the queue, links found on each new page are also added to the queue. Harvesting links from actual Web pages dramatically decreases the amount of spam a crawler encounters, because most Web authors only link to what they believe are high-quality pages.

By harvesting links from every page it encounters, a crawler can quickly build a list of links that can cover broad reaches of the Web. This technique also allows crawlers to probe deep within individual sites, following internal navigation links. In theory, a crawler can discover and index virtually every page on a site starting from a single URL, if the site is well designed with extensive internal navigation links.
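The link-harvesting loop can be sketched as a breadth-first traversal over a queue of URLs. The sketch below makes several simplifying assumptions: the “Web” is a small hypothetical dictionary rather than real servers, `fetch_links` stands in for downloading a page and extracting its links, and a single seed URL starts the crawl.

```python
from collections import deque

# Hypothetical in-memory "Web": each URL maps to the links found on that page.
TOY_WEB = {
    "http://example.com/": ["http://example.com/a", "http://example.com/b"],
    "http://example.com/a": ["http://example.com/b", "http://example.com/c"],
    "http://example.com/b": ["http://example.com/"],
    "http://example.com/c": [],
    "http://example.com/orphan": ["http://example.com/"],
}


def fetch_links(url):
    """Stand-in for downloading a page and culling the links it contains."""
    return TOY_WEB.get(url, [])


def crawl(seed):
    """Visit every page reachable from the seed by following forward links."""
    queue = deque([seed])   # URLs waiting to be fetched
    seen = {seed}           # URLs already queued, so none is fetched twice
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


print(crawl("http://example.com/"))
```

Starting from the home page, the crawl reaches every page that some other page links to. The page at `/orphan` links out but has no inbound links, so this method never discovers it.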

Although their function is simple, crawlers must be programmed to handle several challenges. First, since most crawlers send out simultaneous requests for thousands of pages, the queue of “visit soon” URLs must be constantly examined and compared with URLs already existing in the search engine’s index. Duplicates in the queue must be eliminated to prevent the crawler from fetching the same page more than once. If a Web page has already been crawled and indexed, the crawler must determine if enough time has passed to justify revisiting the page, to assure that the most up-to-date copy is in the index. And because crawling is a resource-intensive operation that costs money, most search engines limit the number of pages that will be crawled and indexed from any one Web site.
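These three checks (removing duplicates, deciding whether a revisit is due, and capping pages per site) might be combined roughly as follows. The 30-day revisit interval, the cap of two pages, the day-number timestamps, and the function `plan_crawl` are assumptions made for this sketch; real engines tune such policies individually, and a real cap would apply across the whole index rather than one batch.

```python
from urllib.parse import urlparse

REVISIT_AFTER_DAYS = 30   # assumed revisit interval
MAX_PAGES_PER_SITE = 2    # assumed per-site cap, applied to this batch only


def plan_crawl(queue, last_crawled_day, today):
    """Return the URLs worth fetching now, in queue order."""
    planned = []
    seen = set()
    per_site = {}
    for url in queue:
        if url in seen:
            continue                      # duplicate entry in the queue
        seen.add(url)
        last = last_crawled_day.get(url)
        if last is not None and today - last < REVISIT_AFTER_DAYS:
            continue                      # indexed copy is still recent enough
        site = urlparse(url).netloc
        if per_site.get(site, 0) >= MAX_PAGES_PER_SITE:
            continue                      # this site has reached its page limit
        per_site[site] = per_site.get(site, 0) + 1
        planned.append(url)
    return planned


queue = [
    "http://a.example/1",
    "http://a.example/1",      # duplicate
    "http://a.example/2",      # crawled 5 days ago
    "http://a.example/3",
    "http://a.example/4",      # would exceed the cap for a.example
    "http://b.example/1",      # crawled 40 days ago, due for a revisit
]
last_crawled_day = {"http://a.example/2": 95, "http://b.example/1": 60}

print(plan_crawl(queue, last_crawled_day, today=100))
```

Of the six queued URLs only three are fetched, which illustrates why a site can be partly indexed even when the crawler knows about more of its pages.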

This is a crucial point—you can’t assume that just because a search engine indexes some pages from a site that it indexes all of the site’s pages.

Because much of the Web is highly connected via hypertext links, crawling can be surprisingly efficient. A May 2000 study published by researchers at AltaVista, Compaq, and IBM drew several interesting conclusions that demonstrate that crawling can, in theory, discover most pages on the visible Web (Broder et al., 2000). The study found that:

- For any randomly chosen source and destination page, the probability that a direct hyperlink path exists from the source to the destination is only 24 percent.

- If a direct hypertext path does exist between randomly chosen pages, its average length is 16 links. In other words, a Web browser would have to click links on 16 pages to get from random page A to random page B. This finding is less than the 19 degrees of separation postulated in a previous study, but also excludes the 76 percent of pages lacking direct paths.

- If an undirected path exists (meaning that links can be followed forward or backward, a technique available to search engine spiders but not to a person using a Web browser), its average length is about six degrees.

- More than 90 percent of all pages on the Web are reachable from one another by following either forward or backward links. This is good news for search engines attempting to create comprehensive indexes of the Web.

These findings suggest that efficient crawling can uncover much of the visible Web.
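The distinction between directed and undirected paths is easier to see on a tiny example. The four-page graph below is hypothetical and has nothing to do with the data in the study; the sketch only shows how following links backward as well as forward can connect pages that forward links alone cannot.

```python
from collections import deque

# Hypothetical link graph: page -> pages it links to.
LINKS = {
    "A": ["B"],
    "B": ["C"],
    "C": [],
    "D": ["C"],
}


def shortest_path_length(graph, start, goal):
    """Breadth-first search; returns the number of links, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        page, dist = queue.popleft()
        if page == goal:
            return dist
        for nxt in graph.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


def undirected(graph):
    """Add a backward edge for every forward link."""
    both = {page: set(targets) for page, targets in graph.items()}
    for page, targets in graph.items():
        for target in targets:
            both.setdefault(target, set()).add(page)
    return both


print(shortest_path_length(LINKS, "A", "C"))              # forward links only
print(shortest_path_length(LINKS, "A", "D"))              # no directed path
print(shortest_path_length(undirected(LINKS), "A", "D"))  # backward links allowed
```

Page D cannot be reached from A by clicking links, but it can be reached once the link from D to C is followed in reverse.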

SEARCH ENGINE INDEXERS

When a crawler fetches a page, it hands it off to an indexer, which stores the full text of the page in the search engine’s database, typically in an inverted index data structure. An inverted index is sorted alphabetically, with each index entry storing the word, a list of the documents in which the word appears, and in some cases the actual locations within the text where the word occurs. This structure is ideally suited to keyword-based queries, providing rapid access to documents containing the desired keywords.

As an example, an inverted index for the phrases “life is good,” “bad or good,” “good love,” and “love of life” would contain identifiers for each phrase (numbered one through four), and the position of the word within the phrase.

Table 2.2 shows the structure of this index.
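The same four phrases can be indexed with a short Python sketch. The layout here is an assumption made for illustration (each word maps to a list of phrase-number and position pairs, with positions counted from one) and is not meant to reproduce the table’s exact format.

```python
from collections import defaultdict

# The four example phrases, numbered one through four.
phrases = {
    1: "life is good",
    2: "bad or good",
    3: "good love",
    4: "love of life",
}


def build_inverted_index(docs):
    """Map each word to the (document id, position) pairs where it occurs."""
    index = defaultdict(list)
    for doc_id, text in docs.items():
        for position, word in enumerate(text.split(), start=1):
            index[word].append((doc_id, position))
    # Sort the entries alphabetically by word, as described above.
    return dict(sorted(index.items()))


index = build_inverted_index(phrases)
for word, postings in index.items():
    print(word, postings)
```

Looking up a keyword is then a single dictionary access: `index["good"]` returns every phrase containing the word, along with where it appears.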

To improve search performance, some search engines eliminate common words called stop words (such as is, or, and of in the above example). Stop words are so common they provide little or no benefit in narrowing a search so they can safely be discarded. The indexer may also take other performance-enhancing steps like eliminating punctuation and multiple spaces, and may convert all letters to lowercase.
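A minimal sketch of these clean-up steps follows. The stop word list contains only the three words from the example, and the function name `normalize` is invented here; production engines use longer lists and more careful tokenizing.

```python
import re

STOP_WORDS = {"is", "or", "of"}  # only the three stop words from the example


def normalize(text):
    """Lowercase, strip punctuation, collapse spaces, and drop stop words."""
    text = text.lower()
    text = re.sub(r"[^\w\s]", " ", text)   # replace punctuation with spaces
    words = text.split()                    # split() also collapses extra spaces
    return [w for w in words if w not in STOP_WORDS]


print(normalize("Life  is GOOD!"))
print(normalize("Love of life."))
```

The trade-off is that a query consisting only of stop words has nothing left to match, which is one reason not every engine discards them.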

Some search engines save space in their indexes by truncating words to their root form, relying on the query processor to expand queries by adding suffixes to the root forms of search terms. Indexing the full text of Web pages allows a search engine to go beyond simply matching single keywords. If the location of each word is recorded, proximity operators such as NEAR can be used to limit searches. The engine can also match multi-word phrases, sentences, or even larger chunks of text. If a search engine indexes HTML code in addition to the text on the page, searches can also be limited to specific fields on a page, such as the title, URL, body, and so on.
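Recorded word positions are what make proximity and phrase matching possible. The sketch below assumes a NEAR operator that means “within a given number of words, in either order”; the default distance of three and the function names are assumptions, and each engine that offers NEAR defines its own window.

```python
def positions(words):
    """Map each word to the list of positions where it occurs (counted from 0)."""
    table = {}
    for i, word in enumerate(words):
        table.setdefault(word, []).append(i)
    return table


def near(text, first, second, max_distance=3):
    """True if the two words occur within max_distance words of each other."""
    table = positions(text.lower().split())
    return any(
        abs(i - j) <= max_distance
        for i in table.get(first, [])
        for j in table.get(second, [])
    )


def phrase_match(text, phrase):
    """True if the words of the phrase appear consecutively and in order."""
    words = text.lower().split()
    target = phrase.lower().split()
    n = len(target)
    return any(words[i:i + n] == target for i in range(len(words) - n + 1))


text = "search engines index the full text of web pages"
print(near(text, "search", "index", max_distance=2))
print(near(text, "search", "pages"))
print(phrase_match(text, "full text"))
```

Without stored positions, an index could report only that both words occur somewhere in a document, not how close together they are.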

THE QUERY PROCESSOR

The query processor is arguably the most complex part of a search engine. The query processor has several parts, including

The major differentiator of one search engine from another lies in the way relevance is calculated. Each engine is unique, emphasizing certain variables and downplaying others to calculate the relevance of a document as it pertains to a query.
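A toy illustration may help here. In the sketch below, two imaginary engines score the same two pages using the same three signals but weight them differently, and so rank the pages in opposite order. The signal names, the weights, and the scores are all invented for the example; no real engine's formula is implied.

```python
def relevance(features, weights):
    """Weighted sum of a page's signals (all values are hypothetical)."""
    return sum(weights[name] * value for name, value in features.items())


def rank(pages, weights):
    """Order page names from most to least relevant."""
    return sorted(
        pages,
        key=lambda name: relevance(pages[name], weights),
        reverse=True,
    )


# Invented signal values for two pages that match the same query.
pages = {
    "page_a": {"term_frequency": 0.9, "title_match": 0.0, "link_popularity": 0.2},
    "page_b": {"term_frequency": 0.3, "title_match": 1.0, "link_popularity": 0.7},
}

# Engine X emphasizes how often the keywords appear in the text.
engine_x = {"term_frequency": 0.8, "title_match": 0.1, "link_popularity": 0.1}
# Engine Y emphasizes titles and links, and downplays raw frequency.
engine_y = {"term_frequency": 0.2, "title_match": 0.4, "link_popularity": 0.4}

print(rank(pages, engine_x))
print(rank(pages, engine_y))
```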

query, and select an engine appropriately.

Just as Web directories have a set of issues of concern to a searcher, so do search engines. Some of these issues are technical; others have to do with choices made by the architects and engineers who create and maintain the engines.

Cost of crawling. Crawling the Web is a resource-intensive operation. The search engine provider must maintain computers with sufficient power and processing capability to keep up with the explosive growth of the Web, as well as a high-speed connection to the Internet backbone. It costs money every time a page is fetched and stored in the search engine’s database. There are also costs associated with query processing, but in general crawling is by far the most expensive part of maintaining a search engine.

Since no search engine’s resources are unlimited, decisions must be made to keep the cost of crawling within an acceptable budgetary range.
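One way to picture such a decision is a crawler that stops after a fixed number of pages or a fixed number of links away from its starting point. The sketch below walks a small in-memory link graph rather than the live Web; the page names and the `max_pages` and `max_depth` limits are hypothetical, and real crawlers apply many more rules.

```python
from collections import deque


def crawl(link_graph, seed, max_pages, max_depth):
    """Breadth-first crawl that stops at a page budget or depth limit."""
    fetched = []
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue and len(fetched) < max_pages:
        url, depth = queue.popleft()
        fetched.append(url)  # stands in for fetching and storing the page
        if depth == max_depth:
            continue  # too deep: do not follow this page's links
        for link in link_graph.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append((link, depth + 1))
    return fetched


# A made-up site: each page maps to the pages it links to.
link_graph = {
    "home": ["about", "products"],
    "products": ["widget", "gadget"],
    "widget": ["specs"],
}

print(crawl(link_graph, "home", max_pages=10, max_depth=1))
print(crawl(link_graph, "home", max_pages=4, max_depth=3))
```

In both runs some pages are never fetched. Whatever the limit, pages beyond it stay out of the index.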

Much has been made of these overlooked pages, and many of the major engines are making serious efforts to include them and make their indexes more comprehensive. Unfortunately, the engines have also discovered through their “deep crawls” that there’s a tremendous amount of duplication and spam on the Web.

Current-generation crawlers also have little ability to determine the quality or appropriateness of a Web page, or whether it is a page that changes frequently and should be recrawled on a timely basis.

User expectations and skills. Users often have unrealistic expectations of what search engines can do and the data that they contain. Trying to determine the best handful of documents from a corpus of millions or billions of pages, using just a few keywords in a query, is an almost impossible task. Yet most searchers do little more than enter simple two- or three-word queries, and rarely take advantage of the advanced limiting and control functions all search engines offer.

Search engines go to great lengths to successfully cope with these woefully basic queries.

Fortunately, increases in both processing power and bandwidth are providing search engines with the capability to use more computationally intensive techniques without sacrificing speed. Unfortunately, the relentless growth of the Web works against improvements in computing power and bandwidth simply because, as the Web grows, the search space required to fully evaluate it also increases.

Bias toward text. Most current-generation search engines are highly optimized to index text. If there is no text on a page (say it’s nothing but a graphic image, or a sound or audio file), there is nothing for the engine to index. For non-text objects such as images, audio, video, or other streaming media files, a search engine can record, in an Archie-like manner, filename and location details but not much more. While researchers are working on techniques for indexing non-text objects, for the time being non-text objects make up a considerable part of the Invisible Web.

Search Engines vs. Directories

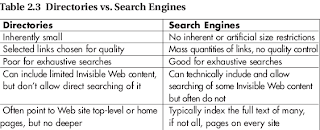

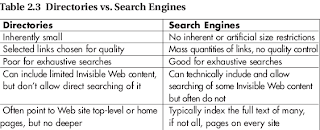

To summarize, search engines and Web directories both have sets of features that can be useful, depending on the searcher’s information need. Table 2.3 compares these primary features.


Early search engines did a competent job of keeping pace with the growth of simple text documents on the Web. But as the Web grew, it increasingly shifted from a collection of simple HTML documents to a rich information space filled with a wide variety of data types. More importantly, owners of content stored in databases began offering Web gateways that allowed user access via the Web. This new richness of data was beyond the capability of search engine spiders, designed only to handle simple HTML text pages.

These tools take many forms: targeted directories and focused crawlers, vertical portals (vortals), metasearch engines, value-added search services, “alternative” search tools, and fee-based Web services. They are sometimes confused with Invisible Web resources. They are not; they simply extend or enhance the capabilities of search engines and directories that focus on material that’s part of the visible Web.

These alternative tools have both positives and negatives for the searcher. On the plus side, these tools tend to be smaller, and are focused on specific categories or sources of information.

This flexibility also can be perceived as a minus.

Targeted Directories and Focused Crawlers

Targeted directories and focused crawlers focus on a specific subject area or domain of knowledge. Just as you have always used a subject-specific reference book to answer a specific question, targeted search tools can help you save time and pinpoint specific information quickly on the Web. Why attempt to pull a needle from a large haystack with material from all branches of knowledge when a specialized tool allows you to limit your search in specific ways that relate to the type of information being searched?

Yet this is exactly what many of those same people do when they use a general-purpose search engine or directory such as AltaVista or Yahoo! when there are far more appropriate search tools available for a specialized task.

Targeted Directories

Targeted directories are Web guides compiled by humans that focus on a particular specialized topic or area of information. They are generally compiled by subject matter experts whose principal concerns are quality, authority, and reliability of the resources included in the directory.

EXAMPLES OF TARGETED DIRECTORIES

Comprehensive subject coverage. Many of the pages included in targeted directories are available via general-purpose directories, but targeted directories are more likely to have comprehensive coverage of a particular subject area. In essence, they are “concentrated haystacks” where needles are more likely to be the size of railroad spikes, and much easier to find.

Up-to-date listings. People who compile and maintain targeted directories have a vested interest in keeping the directory up to date, as they are usually closely and personally associated with the directory by colleagues or other professionals in the field. Keeping the directory up to date is often a matter of honor and professional reputation for the compiler.

Listings of new resources. Since the compilers of targeted directories are often subject-matter experts in their field, they closely follow new developments in their fields. They also can rely on a network of other people in the field to monitor new developments and alert them when a new Web resource is available. Site developers are also motivated to inform targeted directories of new resources for the prestige factor of securing a listing. Finally, many focused directories are compiled by non-profit organizations, which are not subject to the occasional subtle pressure of bias from advertisers.

Focused Crawlers

Like targeted directories, focused crawlers center on specific subjects or topics.

EXAMPLES OF FOCUSED CRAWLERS

Vertical Portals (Vortals)

Vertical Portals (also known as “Vortals”) are mini-versions of general-purpose search engines and directories that focus on a specific topic or subject area. They are often made up of both a targeted directory and listings compiled by a focused crawler. Vortals are most often associated with “Business to Business” (B2B) sites, catering to both the information and commerce needs of particular industries or services. While many Vortals provide great information resources for searchers, their primary focus is usually on providing a virtual marketplace for the trade of goods and services. As such, Vortals can be excellent resources for researchers looking for current information on business concerns ranging from manufacturing to global trade.

How to Find Vortals

There are thousands of Vortals on all conceivable subjects. Rather than providing specific examples, here are two focused directories that specialize in Vortals.

Metasearch Engines

Metasearch engines submit queries to multiple search engines and Web directories simultaneously. Rather than crawling the Web and building its own index, a metasearch engine relies on the indices created by other search engines. This allows you to quickly get results from more than one general-purpose search engine.
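In code, the basic idea might look like the sketch below. The two “engines” are stand-in functions that return canned results, and the URLs are placeholders; a real metasearch engine would send the query to live services over the network and would need its own scheme for ranking the merged list.

```python
from concurrent.futures import ThreadPoolExecutor


def engine_one(query):
    """Stand-in for a real search engine; returns canned results."""
    return ["http://example.com/a", "http://example.com/b"]


def engine_two(query):
    """A second stand-in engine whose results partly overlap."""
    return ["http://example.com/b", "http://example.com/c"]


def metasearch(query, engines):
    """Send one query to every engine at once and merge the results."""
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        # map() returns the result lists in the same order as `engines`.
        result_lists = list(pool.map(lambda engine: engine(query), engines))

    merged = []
    seen = set()
    for results in result_lists:
        for url in results:
            if url not in seen:  # drop duplicates across engines
                seen.add(url)
                merged.append(url)
    return merged


print(metasearch("invisible web", [engine_one, engine_two]))
```

Notice that the metasearch function keeps no index of its own. Everything it returns comes from the engines it queries.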

While some searchers swear by metasearch engines, they are somewhat limited because they can only pass through a small set of the advanced search commands to any given engine. Searchers typically take a “least common denominator” approach that favors quantity of results over refined queries that might return higher quality results.

EXAMPLES OF METASEARCH ENGINES

Issues with Metasearch Engines

Metasearch engines attempt to solve the haystack problem by searching several major search engines simultaneously. The idea is to combine haystacks to increase the probability of finding relevant results for a search. The trap many people fall into is thinking that metasearch engines give you much broader coverage of the Web. Searchers reason that since each engine indexes only a portion of the Web, by using a metasearch engine, the probability of finding documents that an individual engine might have missed is increased. In other words, combining partial haystacks should create a much more complete, larger haystack.

In theory, this is true. In practice, however, you don’t get significantly broader coverage. Why? Because the metasearch engines run up against the same limitations on total results returned that you encounter searching a single engine. Even if search engines report millions of potential matches on your keywords, for practical reasons, all of the engines limit the total number of results you can see—usually between 200 and 1,000 total results. Results found beyond these arbitrary cutoff points are effectively inaccessible without further query refinement. Quite the opposite of searching whole haystacks with great precision, you’re actually searching only portions of partial haystacks with less precision!
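A little arithmetic makes the point. The numbers below are invented: three engines each report a very large number of matches but let you view only a capped number of them. The function name `visible_results` is hypothetical, and the total is an upper bound, since the engines' results may overlap.

```python
def visible_results(reported_matches, result_caps):
    """Upper bound on how many results a searcher can actually view."""
    return sum(
        min(matches, cap)
        for matches, cap in zip(reported_matches, result_caps)
    )


# Invented figures for three engines queried by one metasearch engine.
reported = [2_000_000, 1_500_000, 900_000]  # matches each engine reports
caps = [1_000, 200, 500]                    # results each engine will show

visible = visible_results(reported, caps)
print(visible)  # 1700

# Share of the largest engine's reported matches that is viewable.
print(f"{visible / max(reported):.4%}")
```

Even with three engines combined, the searcher can reach well under one percent of what any single engine claims to have found.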

Metasearch engines generally do nothing more than submit a simple search to the various engines they query. They aren’t able to pass on advanced search queries that use Boolean or other operators to limit or refine results.

Value-Added Search Services

Some Web search services combine a general-purpose search engine or directory with a proprietary collection of material that’s only available for a fee. These value-added search services try to combine the best of both worlds: free resources available on the Web, and high-quality information offered by reputable information publishers.

Many of these services evolved from models used by early consumer-oriented services like the Source, CompuServe, Prodigy, and, of course, today’s most popular closed information system, America Online (AOL). Unlike the consumer services, which require a subscription to the complete service to gain access to any of its parts, value-added search services on the Web tend to offer varying levels of access based on need and willingness to pay. Most offer at least some free information (sometimes requiring filling out a simple registration form first). The premium information offered by these services is generally first rate, and well worth the modest fees if the searcher is looking for authoritative, high-quality information.

EXAMPLES OF VALUE-ADDED SEARCH SERVICES

Alternative Search Tools

Most people think of searching the Web as firing up a browser and pointing it to a search engine or directory home page, typing keyword queries, and reading the results on the browser screen. While most traditional search engines and directories do indeed operate this way, there are a number of alternative search tools that both transcend limitations and offer additional features not generally available otherwise.

Browser Agents

Browser agents are programs that work in conjunction with a Web browser to enhance and extend the browser’s capabilities. While these tools focus on “search,” they work differently from traditional keyword-based search services. Some tools attempt to automate the search process by analyzing and offering selected resources proactively. Others go beyond simple keyword matching schemes to analyze the context of the entire query, from words to sentences and even entire paragraphs.

Browser agents can be very handy add-on tools for the Web searcher. The downside to all of these tools is that they are stand-alone pieces of software that must be downloaded, installed, and run in conjunction with other programs. This can quickly lead to desktop clutter, and potential system conflicts because the programs are not designed to work together. The best strategy for the searcher is to sample a number of these programs and select one or two that prove the most useful for day-to-day searching needs.

Client-Based Search Tools

Client-based Web search utilities (sometimes called “agents” or “bots”) reside on your own computer or network, not on the Internet. Like other software, they are customizable to meet your personal needs. Because the software resides on your own computer, it can “afford” to be more computationally intensive in processing your search queries.

Client-based Web search utilities have a number of key features generally not found on Web-based search engines and directories.